As the internet becomes increasingly ingrained in our daily lives, concerns over the security of data, communications, commerce, and more have never been greater. Despite extensive measures to prevent attacks, from intensive user authentication to firewalls to antivirus and anti-malware software, hackers are still finding new ways to inflict damage on organizations’ networks.

A variety of network intrusion detection systems (NIDSs) have been deployed to thwart would-be attackers, but their continued development has created a critical paradox: as NIDSs accumulate more features from training datasets (examples of past attack patterns and models of normal behavior that enable them to pinpoint anomalous activity), the bloated training process can slow their detection capabilities.

A recent machine learning (ML)-based scheme from a team of IEEE researchers is showing some promise in solving this paradox, with a novel multi-stage approach that the team claims can reduce computational complexity while maintaining detection ability—enabling greater overall performance.

“The proposed novel multi-stage network intrusion detection framework reduces the overall computational complexity of the deployed machine learning model—by reducing the number of features as well as the number of training samples the model needs—while enhancing its detection performance in terms of network attack detection accuracy,” said Abdallah Shami, senior member, IEEE, and professor with the ECE Department at Western University in London, Ontario, Canada. “Additionally, it accounts for a common problem facing public cybersecurity datasets (i.e., the imbalanced nature of the datasets) by applying an oversampling technique to provide more network attack samples to better learn attack patterns/characteristics.”

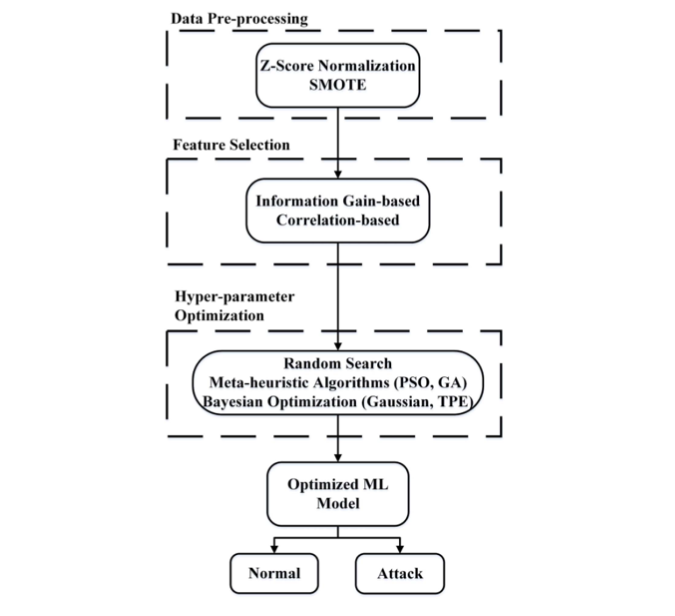

The paper extends the team’s previous work on ML-based NIDS frameworks. The new framework is divided into three stages. The first pre-processes the data with Z-score normalization and applies the synthetic minority oversampling technique (SMOTE), which offsets class imbalance by generating additional attack samples for the model to learn from. The second stage uses feature selection to pare the ML classification model down to the minimum number of features, which reduces the model's time complexity and lowers its training time while still meeting performance metrics. The third stage optimizes the hyper-parameters of the different ML classification models considered. It does so by investigating three hyper-parameter tuning methods: random search; meta-heuristic optimization algorithms, namely particle swarm optimization and a genetic algorithm; and Bayesian optimization.
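The first stage can be sketched in a few lines of plain Python. This is a minimal illustration under stated assumptions, not the researchers' implementation: the function names `zscore_normalize` and `smote_like_oversample`, the neighbour count `k=3`, and the toy records are all hypothetical. The oversampler follows the basic SMOTE idea of interpolating between a minority sample and one of its nearest minority neighbours.

```python
import math
import random
import statistics


def zscore_normalize(rows):
    """Scale each feature column to zero mean and unit (population) standard deviation."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # A constant column has zero standard deviation; fall back to 1.0 to avoid dividing by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return [[(x - m) / s for x, m, s in zip(row, means, stds)] for row in rows]


def smote_like_oversample(minority, n_new, k=3, seed=0):
    """Create n_new synthetic minority samples by interpolating toward a near neighbour."""
    if len(minority) < 2:
        raise ValueError("need at least two minority samples to interpolate")
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        base_idx = rng.randrange(len(minority))
        base = minority[base_idx]
        # The k closest other minority samples, by Euclidean distance.
        others = [p for i, p in enumerate(minority) if i != base_idx]
        neighbours = sorted(others, key=lambda p: math.dist(base, p))[:k]
        neighbour = rng.choice(neighbours)
        gap = rng.random()  # position along the segment from base to neighbour
        synthetic.append([b + gap * (n - b) for b, n in zip(base, neighbour)])
    return synthetic


# Made-up two-feature records for illustration only (not taken from the paper's datasets).
normal = [[0.10, 200.0], [0.20, 220.0], [0.15, 210.0],
          [0.12, 190.0], [0.18, 205.0], [0.11, 215.0]]
attack = [[0.90, 900.0], [0.95, 950.0], [0.85, 880.0]]

scaled = zscore_normalize(normal + attack)
scaled_attack = scaled[len(normal):]
# Generate enough synthetic attack samples to match the number of normal samples.
synthetic = smote_like_oversample(scaled_attack, n_new=len(normal) - len(attack))
print(len(synthetic), "synthetic attack samples added")
```

In a real pipeline the normalization statistics would typically be computed on the training split only, and a maintained library implementation of SMOTE would be preferable to this hand-rolled version.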

The results of these optimization stages are combined into the optimized ML classification model for the enhanced NIDS, which can more efficiently classify new instances as either normal behavior or attacks.
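Of the tuning methods named for the third stage, random search is the simplest to illustrate. The sketch below assumes a tiny k-nearest-neighbour classifier as a stand-in model and tunes only its neighbour count; the article does not say which classifiers or search ranges the researchers used, so the helper names, the candidate values, and the toy data are all hypothetical.

```python
import math
import random


def knn_predict(train_X, train_y, x, k):
    """Majority vote among the k training points closest to x."""
    nearest = sorted(range(len(train_X)), key=lambda i: math.dist(train_X[i], x))[:k]
    votes = [train_y[i] for i in nearest]
    return max(set(votes), key=votes.count)


def validation_accuracy(train_X, train_y, val_X, val_y, k):
    """Fraction of validation points the k-NN stand-in labels correctly."""
    hits = sum(knn_predict(train_X, train_y, x, k) == y for x, y in zip(val_X, val_y))
    return hits / len(val_y)


def random_search(train_X, train_y, val_X, val_y, k_choices, n_trials=5, seed=0):
    """Sample hyper-parameter values at random and keep the best-scoring one."""
    rng = random.Random(seed)
    best_k, best_score = None, -1.0
    for _ in range(n_trials):
        k = rng.choice(k_choices)
        score = validation_accuracy(train_X, train_y, val_X, val_y, k)
        if score > best_score:
            best_k, best_score = k, score
    return best_k, best_score


# Made-up, well-separated toy data: label 0 = normal, label 1 = attack.
train_X = [[0.0, 0.0], [0.1, 0.2], [0.2, 0.1], [1.0, 1.0], [0.9, 1.1], [1.1, 0.9]]
train_y = [0, 0, 0, 1, 1, 1]
val_X = [[0.05, 0.1], [1.0, 0.95]]
val_y = [0, 1]

# Odd candidate values avoid tied votes in a two-class problem.
best_k, best_score = random_search(train_X, train_y, val_X, val_y, k_choices=[1, 3, 5])
print("best k:", best_k, "validation accuracy:", best_score)
```

The meta-heuristic and Bayesian methods mentioned above differ mainly in how they choose the next candidate: instead of sampling blindly, they use the scores of earlier trials to guide the search.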

Figure: Proposed multi-stage optimized ML-based NIDS framework.

The paper provides a detailed look at previous iterations of ML-based NIDS work and outlines their shortcomings before exploring the mathematical backgrounds behind the different deployed techniques. The researchers then go on to present their new framework, describe the datasets under consideration, and finally, discuss the experimental results of the scheme.

According to the authors, their multi-stage ML-based NIDS framework reduced the required training sample size by as much as 74% and the feature set size by up to 50%. In addition, hyper-parameter optimization raised detection accuracy above 99% on both datasets considered, which the authors report as 1-2% higher accuracy and a 1-2% lower false alarm rate than recent works in the literature.
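For readers unfamiliar with the two metrics quoted above, both follow from the standard confusion-matrix counts: detection accuracy is the share of all instances classified correctly, and the false alarm rate is the share of normal instances wrongly flagged as attacks. The counts in this short sketch are hypothetical and chosen only to make the arithmetic easy to follow; they are not results from the paper.

```python
def detection_metrics(tp, tn, fp, fn):
    """Return (accuracy, false_alarm_rate) from confusion-matrix counts.

    tp: attacks flagged as attacks      fn: attacks missed
    tn: normal traffic left alone       fp: normal traffic flagged as attacks
    """
    accuracy = (tp + tn) / (tp + tn + fp + fn)
    false_alarm_rate = fp / (fp + tn)
    return accuracy, false_alarm_rate


# Hypothetical counts for 1,000 classified instances (not taken from the paper).
accuracy, false_alarm_rate = detection_metrics(tp=480, tn=510, fp=6, fn=4)
print(f"accuracy: {accuracy:.2%}, false alarm rate: {false_alarm_rate:.2%}")
```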

“The proposed novel multi-stage network intrusion detection framework is a comprehensive framework to optimize the entire machine learning pipeline starting from feature selection all the way through to the model training and testing stages,” Shami said. “It shows prominent and promising results in terms of performance—having high network attack detection accuracy and low computational complexity—and generality (being applicable to different data types/datasets as illustrated by the testing on multiple state-of-the-art cybersecurity datasets). Accordingly, the proposed framework highlights the need for and the benefit of considering/optimizing the whole pipeline rather than just the machine learning model.”

The full-text article is available on IEEE Xplore.