Ultrasound imaging is currently one of the most widely used diagnostic procedures in healthcare. The technology plays a critical role in timely diagnosis, disease management and treatment planning across many medical specialties, from cardiology to obstetrics.

However, despite advances in the field, such as more compact and portable ultrasound probes, ultrasound image quality and overall diagnostic performance still can’t compete with those of more expensive and bulkier modalities such as MRI and CT. Additionally, the vast amounts of data generated by 3D systems and high-frame-rate imaging schemes strain both the communication link between probe and system and the image reconstruction algorithms. As a result, ultrasound’s potential to democratize imaging has been limited. To overcome these obstacles, researchers have developed deep learning approaches that could revolutionize many aspects of ultrasound imaging and signal reconstruction, from the front end to advanced applications.

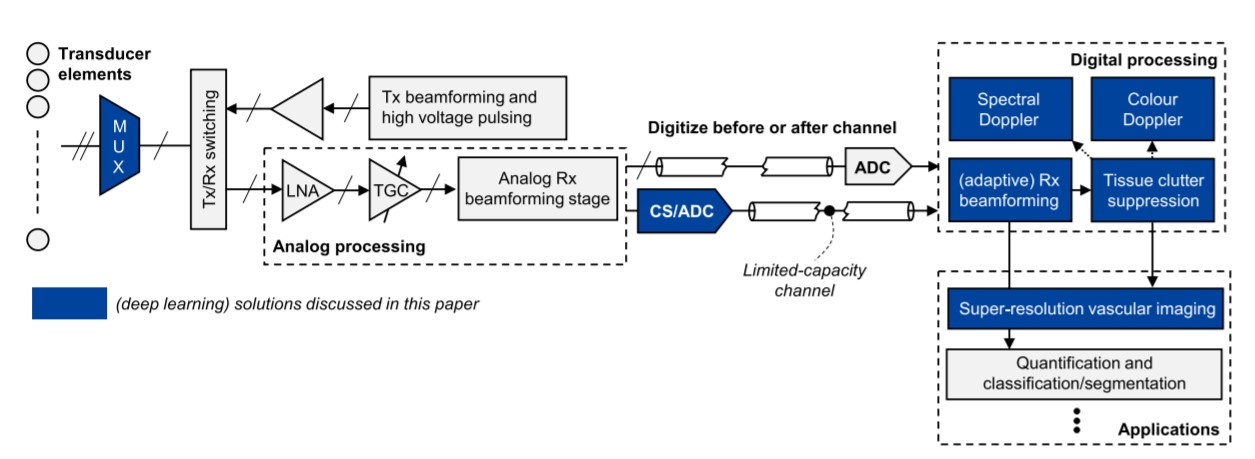

In the last decade, artificial intelligence (AI) has grown rapidly through the advent of deep learning (DL), and what started in computer vision and language modelling has expanded into image reconstruction. Today, as the team’s approach in Figure 1 shows, DL and AI systems can be leveraged to improve imaging resolution and contrast, suppress clutter, enhance spectral estimation, and even help practitioners make actionable decisions from massive amounts of data.

Using DL and data-driven AI models, the team designed several independent building blocks based on trained artificial agents and neural signal processors, all aimed at enhancing the processing capabilities of ultrasound systems.

Figure 1: An overview of the ultrasound imaging chain with the team’s deep learning solutions

“Ultrasound imaging can take a big step forward by leveraging AI systems across their entire processing chains, using knowledge learned from data to improve all aspects of the signal processing pipeline, from the raw sensor data to the images and their downstream interpretation,” said Ruud J. G. van Sloun, an assistant professor at the Eindhoven University of Technology in the Netherlands. “[The] potential impact of achieving very-high-quality ultrasound imaging through AI with compact probes is enormous, both for developing and developed countries.”

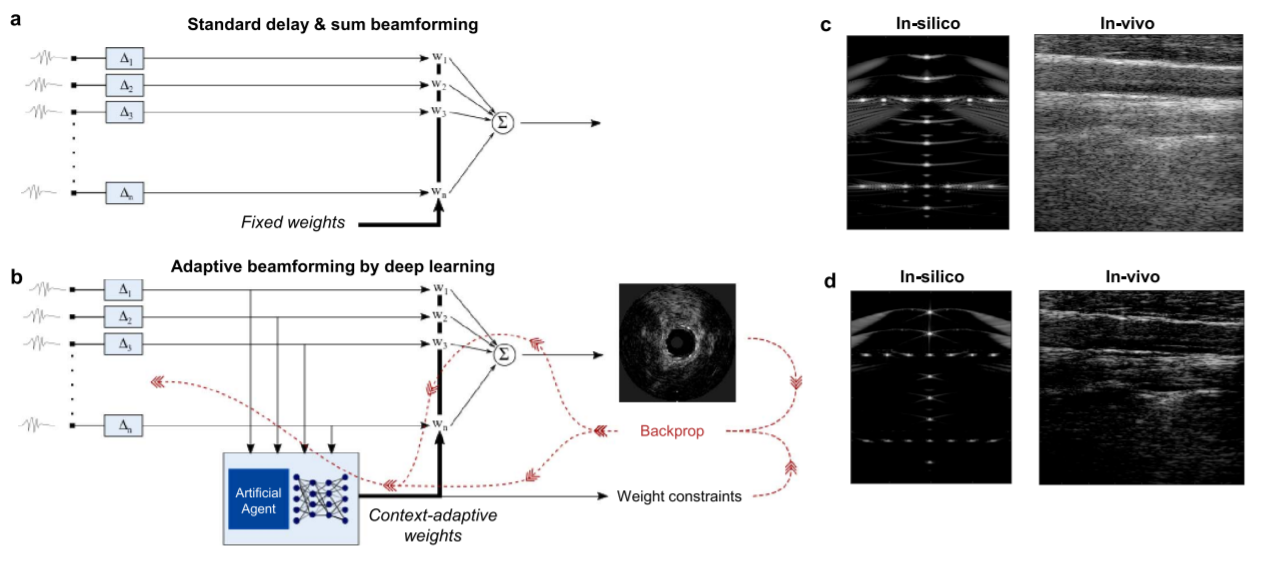

Today, delay-and-sum (DAS) is the most widespread beamformer in ultrasound imaging. Beamforming is the signal processing step that combines the echoes received by the individual elements of a transducer array, delaying each one so that signals from a chosen point or direction add up coherently. The simplicity of DAS has made it the industry standard for real-time applications, but it comes with drawbacks. DAS typically relies on aperture shading (apodization), which tapers the contribution of the outer array elements to suppress sidelobes and increase image contrast; because this also widens the main lobe, the contrast gain comes at the expense of resolution and reconstruction quality. Adaptive beamformers, by comparison, compute their element weights from the received data itself and have shown significant improvements in image quality.
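To make the difference between the two beamformer families concrete, here is a minimal NumPy sketch of their textbook forms for a single pixel. It assumes the channel data have already been delay-compensated, so that an echo from the focal point appears as an all-ones vector across the N elements. A Hann window stands in for aperture shading, and minimum-variance (Capon) weighting with diagonal loading is used as a classic example of an adaptive beamformer. Neither is the team’s deep-learning method, and the function names, toy signal model and loading factor are illustrative assumptions.

```python
import numpy as np


def das_weights(num_elements: int) -> np.ndarray:
    """Delay-and-sum weights with Hann aperture shading (apodization).

    Normalized to sum to 1, so an echo from the focal point (an all-ones
    vector after delay compensation) passes with unit gain.
    """
    w = np.hanning(num_elements)
    return w / w.sum()


def loaded_covariance(snapshots: np.ndarray, load: float = 1e-2) -> np.ndarray:
    """Sample covariance R = mean_k x_k x_k^H plus diagonal loading.

    `snapshots` has shape (K, N): K delay-compensated snapshots of N channels.
    """
    k, n = snapshots.shape
    R = snapshots.T @ snapshots.conj() / k
    return R + load * np.trace(R).real / n * np.eye(n)


def mv_weights(R: np.ndarray) -> np.ndarray:
    """Minimum-variance (Capon) weights w = R^-1 a / (a^H R^-1 a) with a = 1."""
    a = np.ones(R.shape[0], dtype=complex)
    r_inv_a = np.linalg.solve(R, a)
    return r_inv_a / np.vdot(a, r_inv_a)


def beamform(w: np.ndarray, x: np.ndarray) -> complex:
    """Pixel value y = w^H x (np.vdot conjugates its first argument)."""
    return np.vdot(w, x)


def crandn(rng, *shape):
    """Unit-power circular complex Gaussian samples."""
    return (rng.standard_normal(shape) + 1j * rng.standard_normal(shape)) / np.sqrt(2)


# Toy data: N elements, K snapshots; a unit-power echo from the focal point,
# a strong off-axis interferer (linear phase ramp) and weak channel noise.
rng = np.random.default_rng(0)
N, K = 32, 64
steer_int = np.exp(1j * 0.9 * np.arange(N))  # hypothetical interferer direction
X = (crandn(rng, K)[:, None] * np.ones(N)
     + 10 * crandn(rng, K)[:, None] * steer_int
     + 0.1 * crandn(rng, K, N))

R = loaded_covariance(X)
w_das, w_mv = das_weights(N), mv_weights(R)

for name, w in [("DAS (Hann)", w_das), ("MV", w_mv)]:
    power = np.mean([abs(beamform(w, x)) ** 2 for x in X])  # mean output power
    leak = abs(beamform(w, steer_int))  # gain toward the interferer
    print(f"{name}: output power {power:.3f}, interferer gain {leak:.2e}")
```

Both weight vectors pass the focal-point echo with unit gain. The Hann weights are fixed and suppress the interferer only as far as their sidelobe level allows, whereas the MV weights are recomputed from the data and typically place a deep null toward it. The price is a covariance estimate and a matrix solve per pixel, which is part of why DAS remains the real-time default.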

The team demonstrated the effectiveness of adaptive beamforming on plane-wave ultrasound acquisitions, using a deep network as the artificial agent. As outlined in Figure 2, the approach visibly reduced clutter, enhanced tissue contrast, slightly increased the contrast-to-noise ratio (CNR) and significantly improved resolution.

Figure 2: Flow charts of standard delay-and-sum beamforming (a) and adaptive beamforming by deep learning (b), and illustrative images reconstructed in silico and in vivo with both methods (c and d)
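CNR, one of the metrics reported for the deep adaptive beamformer, compares the mean brightness of a target region with that of the background, relative to their combined spread. The sketch below assumes envelope-detected images and one common definition of CNR; the study may use a different variant, and the synthetic cyst, region masks and clutter levels are hypothetical.

```python
import numpy as np


def cnr(envelope: np.ndarray, target: np.ndarray, background: np.ndarray) -> float:
    """Contrast-to-noise ratio between two masked regions of an envelope image.

    Uses CNR = |mu_t - mu_b| / sqrt(var_t + var_b); this is one common
    definition, and some studies report it in dB or normalize differently.
    """
    t, b = envelope[target], envelope[background]
    return float(abs(t.mean() - b.mean()) / np.sqrt(t.var() + b.var()))


# Synthetic check: Rayleigh-distributed speckle with a circular cyst. Clutter
# that leaks into the cyst raises its mean level and lowers the CNR.
rng = np.random.default_rng(1)
yy, xx = np.mgrid[:128, :128]
r2 = (yy - 64) ** 2 + (xx - 64) ** 2
cyst = r2 < 20 ** 2
ring = (r2 > 30 ** 2) & (r2 < 45 ** 2)  # background annulus around the cyst
speckle = rng.rayleigh(scale=1.0, size=(128, 128))

clean = np.where(cyst, 0.05 * speckle, speckle)     # nearly anechoic cyst
cluttered = np.where(cyst, 0.5 * speckle, speckle)  # cyst filled by clutter
print(f"CNR clean: {cnr(clean, cyst, ring):.2f}, cluttered: {cnr(cluttered, cyst, ring):.2f}")
```

Because the metric divides by the combined standard deviation, even a visibly cleaner cyst may raise CNR only modestly when background speckle dominates the denominator.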

“[Our] solutions also enable new applications such as [deep] ultrasound localization microscopy [ULM] to operate in regimes that make translation into clinics more accessible,” said van Sloun. In practice, this means “faster and more precise detection of higher concentrations of contrast agents to cover the vascular bed more time-efficiently.”

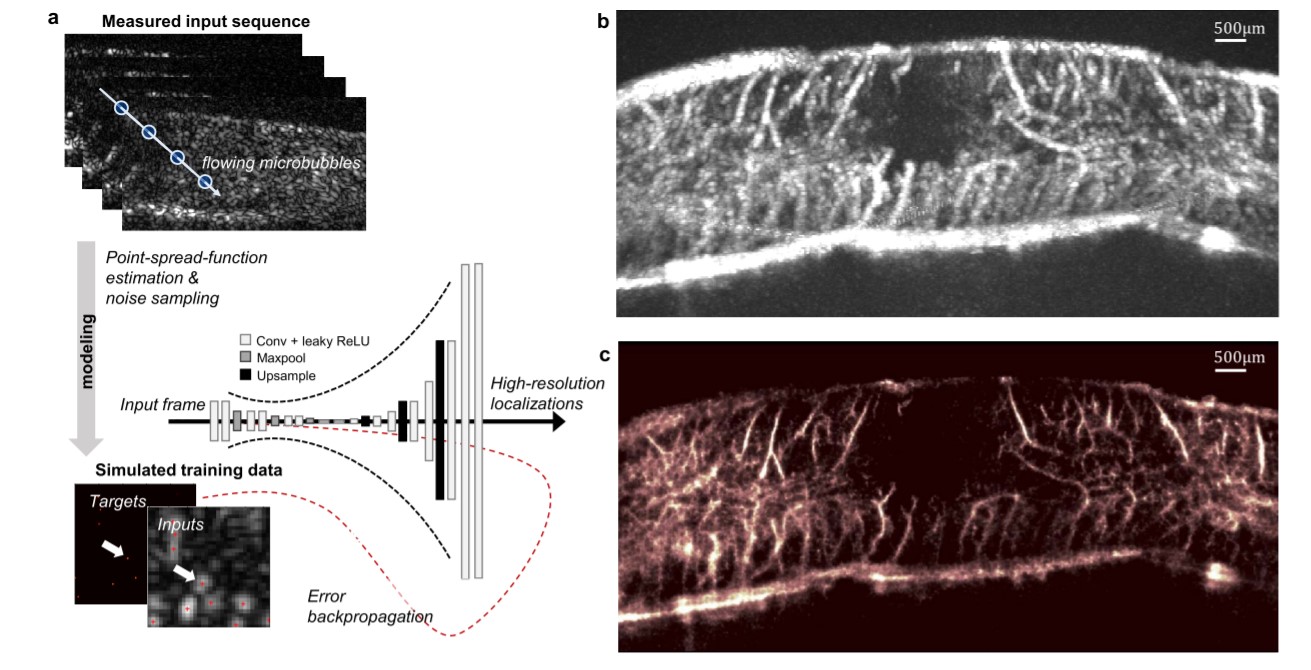

ULM enables deep, high-resolution microvascular imaging by pinpointing individual contrast-agent microbubbles across numerous imaging frames, overcoming the usual trade-off between penetration depth and resolution. Conventional ULM, however, needs the microbubbles to be sparse enough that their signals do not overlap, which lengthens acquisitions. The team’s data-driven deep-ULM approach was tested on a rat’s spinal cord, as seen in Figure 3. Deep-ULM uses a fully convolutional neural network to map a low-resolution input image, containing overlapping microbubble signals, to a high-resolution sparse output image in which the pixel intensities reflect recovered backscatter levels.
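The mapping that deep-ULM learns can be illustrated with a simple forward model: point-like microbubbles on a fine grid form the sparse high-resolution target, and the same bubbles blurred by a point-spread function (PSF) and sampled on a coarse grid form the low-resolution input, in which neighbouring bubbles overlap. The sketch below generates one such pair, assuming an isotropic Gaussian PSF and uniformly placed bubbles; the function name, PSF width and 8× upsampling factor are hypothetical choices, and a more realistic setup would use a measured or simulated system PSF. In this illustrative setup, a fully convolutional network (not shown) would be trained to map `lr` to `hr`.

```python
import numpy as np


def make_ulm_training_pair(num_bubbles, lr_shape=(32, 32), upsample=8,
                           psf_sigma=1.5, rng=None):
    """Return (low-res input, high-res sparse target) for one synthetic frame.

    Assumes an isotropic Gaussian PSF (width in low-res pixels) and bubbles
    placed uniformly at random; pixel i covers [i, i + 1) with centre i + 0.5.
    """
    rng = np.random.default_rng() if rng is None else rng
    h, w = lr_shape
    pos = rng.uniform([0, 0], [h, w], size=(num_bubbles, 2))  # (y, x) positions
    amp = rng.uniform(0.5, 1.0, size=num_bubbles)             # backscatter levels

    # Low-res input: every bubble is blurred by the PSF, so bubbles closer
    # than about one PSF width overlap and cannot be separated by eye.
    yy, xx = np.mgrid[:h, :w] + 0.5
    lr = np.zeros(lr_shape)
    for (py, px), a in zip(pos, amp):
        lr += a * np.exp(-((yy - py) ** 2 + (xx - px) ** 2) / (2 * psf_sigma ** 2))

    # High-res target: each bubble's amplitude in the fine-grid pixel containing it.
    hr = np.zeros((h * upsample, w * upsample))
    iy = np.minimum((pos[:, 0] * upsample).astype(int), h * upsample - 1)
    ix = np.minimum((pos[:, 1] * upsample).astype(int), w * upsample - 1)
    np.add.at(hr, (iy, ix), amp)
    return lr, hr


lr, hr = make_ulm_training_pair(num_bubbles=40, rng=np.random.default_rng(3))
print(lr.shape, hr.shape, np.count_nonzero(hr))
```

`np.add.at` is used instead of plain fancy-index assignment so that two bubbles falling into the same fine pixel add their amplitudes rather than overwrite each other.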

As illustrated in Figure 3, the deep-ULM reconstruction of the rat’s spinal cord achieved significantly higher resolution and contrast than the standard maximum intensity projection image.

Figure 3: Fast ULM through deep learning using a convolutional neural network (a), standard maximum intensity projection across a sequence of frames for a rat’s spinal cord (b), and corresponding deep-ULM reconstruction (c)
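To contrast the maximum intensity projection (MIP) with a localization-based reconstruction, the sketch below builds both from the same toy frame sequence. The MIP keeps each pixel’s brightest value across frames, whereas a conventional ULM pipeline localizes isolated bubbles with sub-pixel precision in every frame and accumulates their positions on a finer grid. It assumes noiseless frames, one bubble per frame and a Gaussian PSF, and uses a three-point Gaussian peak fit as the localizer; the vessel geometry, threshold and function names are hypothetical. Deep-ULM would replace this hand-crafted localization step with a network that also copes with overlapping bubbles.

```python
import numpy as np


def render_frame(positions, shape=(32, 32), psf_sigma=1.5):
    """Low-resolution frame: Gaussian PSF per bubble, pixel centres at i + 0.5."""
    yy, xx = np.mgrid[:shape[0], :shape[1]] + 0.5
    frame = np.zeros(shape)
    for py, px in positions:
        frame += np.exp(-((yy - py) ** 2 + (xx - px) ** 2) / (2 * psf_sigma ** 2))
    return frame


def max_intensity_projection(frames):
    """Standard MIP: brightest value per pixel across the whole sequence."""
    return frames.max(axis=0)


def _gauss3(left, centre, right):
    """Sub-pixel peak offset from three samples, exact for a noiseless Gaussian."""
    l, c, r = np.log([left, centre, right])
    return (l - r) / (2 * (l - 2 * c + r))


def localize(frame, threshold):
    """Local maxima above `threshold`, refined per axis with a 3-point Gaussian fit.

    Assumes bubbles are isolated; overlapping bubbles break this assumption,
    which is the regime deep-ULM is designed to handle.
    """
    points = []
    h, w = frame.shape
    for y in range(1, h - 1):
        for x in range(1, w - 1):
            v = frame[y, x]
            if v >= threshold and v == frame[y - 1:y + 2, x - 1:x + 2].max():
                dy = _gauss3(frame[y - 1, x], v, frame[y + 1, x])
                dx = _gauss3(frame[y, x - 1], v, frame[y, x + 1])
                points.append((y + 0.5 + dy, x + 0.5 + dx))
    return np.array(points).reshape(-1, 2)


def ulm_density(frames, threshold, upsample=8):
    """Accumulate localizations from every frame onto a finer grid."""
    h, w = frames.shape[1:]
    density = np.zeros((h * upsample, w * upsample))
    for frame in frames:
        pts = localize(frame, threshold)
        iy = np.clip((pts[:, 0] * upsample).astype(int), 0, h * upsample - 1)
        ix = np.clip((pts[:, 1] * upsample).astype(int), 0, w * upsample - 1)
        np.add.at(density, (iy, ix), 1)
    return density


# Toy sequence: one bubble per frame flowing along one of two vessels
# that are closer together than the PSF width (hypothetical geometry).
rng = np.random.default_rng(2)
vessels_y = [15.4, 16.6]
frames = np.stack([
    render_frame([(vessels_y[k % 2], rng.uniform(4, 28))]) for k in range(200)
])
mip = max_intensity_projection(frames)
density = ulm_density(frames, threshold=0.5)
```

In this toy sequence the two vessel tracks fall on separate rows of `density`, while in `mip` they occupy a single band a few pixels wide, because the vessels are closer together than the PSF width.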

Van Sloun and his team have only begun to scratch the surface of what deep learning can offer in ultrasound diagnostics. By incorporating smart devices that learn continuously, next-generation imaging systems could be more cost-effective and highly portable while producing better images. Perhaps most importantly, deep learning offers a promising path toward accessible and accurate diagnostics.

As a next step, the team is exploring an autonomous AI-ultrasound system that actively seeks to maximize patient information through intelligent, personalized acquisition and processing of sensor data.

For more information on deep learning and image reconstruction, visit the IEEE Xplore digital library.