The adoption of facial recognition software gets a lot of attention these days, but could facial expression detection take this technology a step further?

In recent tests conducted at the Florida Institute of Technology and Brunel University, the first facial expression recognition system suitable for smart devices proved 96 percent accurate. Further refinement could pave the way for uses such as detecting a post-surgery patient’s pain levels, identifying suspicious people at airports, or analyzing a criminal’s expressions during interrogation.

Facial expression recognition isn’t new, but previous research analyzed only basic feature descriptors and classification methods such as the Gabor descriptor, a linear filter used for texture analysis. By contrast, the global team’s research turned to a relatively new feature descriptor, the facial landmark descriptor, and tested it for the first time on a mobile phone platform.

Facial landmarks are points on specific parts of the facial image (68 in total) that indicate the position of facial muscles and are tracked for movement over time. For example, tracking the landmarks along the eyebrows shows whether they rise or fall, which can help indicate whether a person is scowling or shocked.
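As a rough illustration of tracking a landmark over time, the sketch below measures how far the eyebrow points move vertically between two frames. The eyebrow indices (17 to 26, zero-based) follow a widely used 68-point annotation convention and are an assumption here, as are the function name and the toy coordinates; the article does not say how the researchers index their landmarks.

```python
from typing import List, Tuple

Point = Tuple[float, float]

# Assumed indices: eyebrows occupy points 17-26 (0-based) in a common
# 68-point annotation scheme. Hypothetical for the system described here.
EYEBROW_IDX = range(17, 27)


def eyebrow_shift(prev: List[Point], curr: List[Point]) -> float:
    """Mean vertical movement of the eyebrow landmarks between two frames.

    Image y grows downward, so a negative result means the brows rose.
    """
    deltas = [curr[i][1] - prev[i][1] for i in EYEBROW_IDX]
    return sum(deltas) / len(deltas)


# Toy frames: 68 points each; the eyebrows move up 3 pixels in the second.
frame_a = [(float(i), 100.0) for i in range(68)]
frame_b = [(x, y - 3.0) if i in EYEBROW_IDX else (x, y)
           for i, (x, y) in enumerate(frame_a)]

shift = eyebrow_shift(frame_a, frame_b)
print("brows rose" if shift < 0 else "brows fell or stayed put")
```

A real system would combine many such displacements across all 68 points rather than rely on a single feature.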

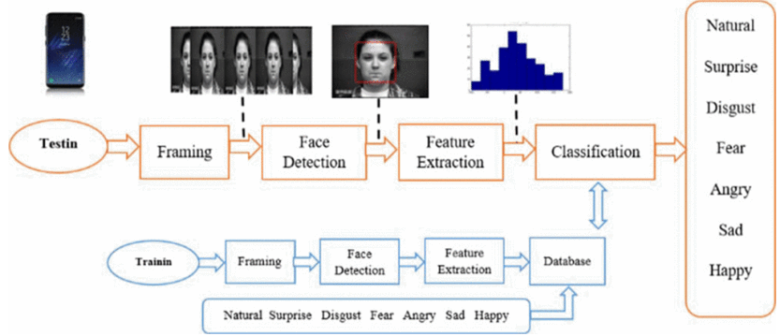

To begin the process, a user takes a picture or video of someone’s face in the smartphone application for facial expression detection. This step corresponds to the “framing” and “face detection” components in Figure 1. From there, feature extraction, with the help of facial landmarks, takes over.

Figure 1: Structure overview of the facial expression recognition system

If the camera does not capture the face straight on, the system automatically adjusts the image so that the facial angles line up with the facial landmarks, calculating the “center of gravity” through a series of formulas.
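The article does not give the formulas, so the following is only a minimal sketch of one plausible reading: take the center of gravity to be the mean of the landmark coordinates, shift the landmarks so that point becomes the origin, and rotate them until the line between the eyes is horizontal. Using the eye line as the rotation reference, and every name in the code, is an assumption.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def centroid(points: List[Point]) -> Point:
    """Center of gravity: the mean of all landmark coordinates."""
    n = len(points)
    return (sum(x for x, _ in points) / n, sum(y for _, y in points) / n)


def normalize(points: List[Point], left_eye: Point, right_eye: Point) -> List[Point]:
    """Center landmarks on their centroid, then rotate them so the
    eye line is horizontal (an assumed alignment rule)."""
    cx, cy = centroid(points)
    angle = math.atan2(right_eye[1] - left_eye[1], right_eye[0] - left_eye[0])
    cos_a, sin_a = math.cos(-angle), math.sin(-angle)
    aligned = []
    for x, y in points:
        dx, dy = x - cx, y - cy
        aligned.append((dx * cos_a - dy * sin_a, dx * sin_a + dy * cos_a))
    return aligned


# Toy face tilted by 45 degrees: two eye points plus one extra landmark.
tilted = [(1.0, 1.0), (3.0, 3.0), (1.0, 3.0)]
aligned = normalize(tilted, left_eye=tilted[0], right_eye=tilted[1])
print(aligned[0], aligned[1])  # both eye points now share the same y value
```

Normalizing this way makes landmark positions comparable across photos taken at different angles and offsets.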

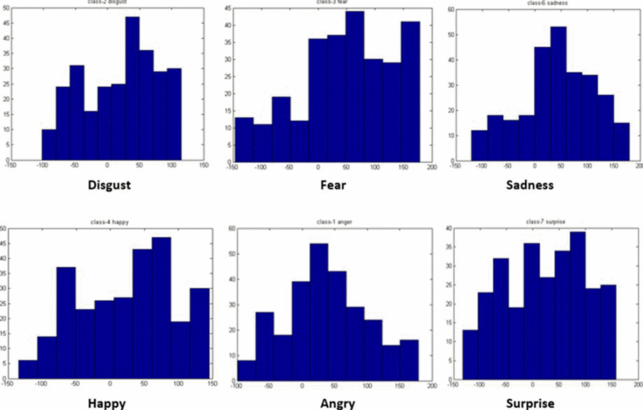

After the facial features have been extracted, a support vector machine (SVM), a classification method optimized for delineation problems such as this, identifies the facial expression from the features produced by the facial landmark descriptor. The SVM classification can identify a specific facial expression in less than two seconds. The seven most common emotions are analyzed (happiness, sadness, anger, fear, disgust, surprise and neutrality), and each creates a unique density graph, depicted in Figure 3.
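To make the classification step concrete, here is a minimal sketch of a linear SVM trained by sub-gradient descent on the hinge loss and extended to several classes with a one-vs-rest scheme. The article does not state which kernel, solver, or multi-class strategy the researchers used, so all of these are assumptions, as are the two-dimensional toy features and the three labels standing in for the landmark descriptor and the seven emotions.

```python
from typing import Dict, List, Sequence, Tuple

Model = Tuple[List[float], float]  # (weights, bias)


def score(model: Model, x: Sequence[float]) -> float:
    """Decision value w.x + b of one binary classifier."""
    w, b = model
    return sum(wi * xi for wi, xi in zip(w, x)) + b


def train_binary(xs: List[Sequence[float]], ys: List[int],
                 lam: float = 0.01, lr: float = 0.1, epochs: int = 50) -> Model:
    """Linear SVM via sub-gradient descent on the L2-regularized hinge loss.

    ys holds +1 / -1 labels.
    """
    w = [0.0] * len(xs[0])
    b = 0.0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            violated = y * score((w, b), x) < 1
            # The L2 penalty shrinks w on every step.
            w = [wi * (1 - lr * lam) for wi in w]
            if violated:
                # Sample is inside the margin or misclassified: move toward it.
                w = [wi + lr * y * xi for wi, xi in zip(w, x)]
                b += lr * y
    return w, b


def train_one_vs_rest(xs: List[Sequence[float]], labels: List[str]) -> Dict[str, Model]:
    """Train one binary SVM per label: that label versus all others."""
    return {
        label: train_binary(xs, [1 if l == label else -1 for l in labels])
        for label in sorted(set(labels))
    }


def predict(models: Dict[str, Model], x: Sequence[float]) -> str:
    """Pick the label whose classifier gives the highest decision value."""
    return max(models, key=lambda label: score(models[label], x))


# Hypothetical 2-D features, one toy sample per expression.
features = [(4.0, 0.0), (-4.0, 0.0), (0.0, 4.0)]
labels = ["happy", "sad", "surprise"]
models = train_one_vs_rest(features, labels)
print(predict(models, (3.0, 0.5)))
```

In practice the feature vectors would be far longer (derived from all 68 landmarks), and a library implementation would replace the hand-written training loop.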

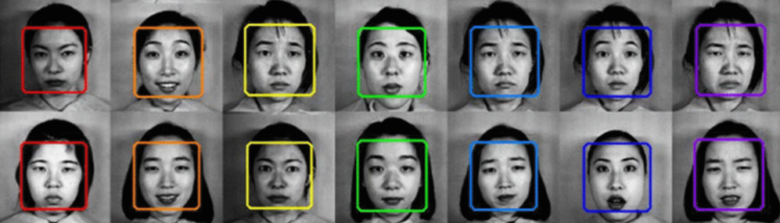

The researchers tested their facial expression recognition system using three public image datasets: CK+ Facial Expression, JAFFE and KDEF, which contain images similar to those in Figure 2. These datasets were chosen intentionally because they include images of people of various ethnicities. Combined, the datasets supplied nearly 2,000 different images, and the system accurately identified the expression in 96.3% of them.
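An accuracy figure like this is simply the share of test images whose predicted expression matches the labeled one. The sketch below shows the calculation on a handful of made-up labels; the data and function names are hypothetical and are not drawn from the study.

```python
from collections import Counter
from typing import List, Tuple


def accuracy(truth: List[str], predicted: List[str]) -> float:
    """Fraction of samples whose predicted label equals the true label."""
    if len(truth) != len(predicted):
        raise ValueError("label lists must have the same length")
    correct = sum(t == p for t, p in zip(truth, predicted))
    return correct / len(truth)


def confusions(truth: List[str], predicted: List[str]) -> Counter:
    """Count each (true, predicted) pair that was misclassified."""
    return Counter((t, p) for t, p in zip(truth, predicted) if t != p)


# Made-up labels for five test images (not the study's data).
truth = ["happy", "sad", "anger", "fear", "happy"]
guess = ["happy", "sad", "anger", "surprise", "happy"]

print(f"{accuracy(truth, guess):.1%}")  # 80.0%
print(confusions(truth, guess))
```

Counting which pairs get confused, not just the overall rate, shows where a classifier struggles, for instance if fear is often mistaken for surprise.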

Figure 2: Facial expression dataset. Angry is red, happy is orange, fear is yellow, neutral is green, sad is blue, surprise is indigo, and disgust is purple.

After testing, the researchers plotted a histogram of the findings (Figure 3), which graphically depicts the excess or lack of certain ‘elements’ in each of the seven facial expressions analyzed. This information is stored in the smartphone application to inform future facial expression analyses, and is ultimately used to train the system continuously through artificial intelligence.

Figure 3: Facial landmarks histogram

Though initial results look promising, Humaid Alshamsi, a researcher at the Florida Institute of Technology, hopes to increase the accuracy by improving the algorithm. Alshamsi is also looking to cloud computing as a next step.

“Next steps are to integrate this system with the cloud – not only making it easier to run the application anywhere, and at any time, but to increase image storage to ensure the system is constantly being trained through new images,” said Alshamsi. “This is the first system of its kind to run on a smartphone, and we will continue to find ways to improve upon it.”

For more information on facial expression recognition, visit IEEE Xplore.