Autonomous vehicles have moved from hype to reality in recent years, and many companies are now looking to hop on the driverless bandwagon. However, most of today’s auto market still consists of legacy vehicles that cannot handle the high-end computing hardware and software that self-driving cars require while maintaining reliability and overall performance. Now, a global research team has developed an efficient and economical deep-learning model for autonomous driving that it says can be implemented in most types of vehicles.

According to Khan Muhammad, a researcher at Sejong University in Seoul, his team’s deep-learning model can support a car’s perception of its surroundings or be used within intelligent transportation systems to improve traffic management. The model can detect various traffic signs and road obstacles, such as pedestrians, and measure the distance to each one. It can also maneuver a car in real time using a single-board computer (a Raspberry Pi) as the ground processing unit for autonomous driving.

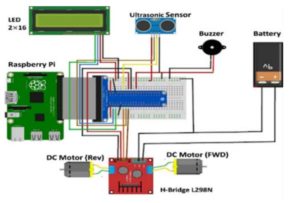

With a three-part design consisting of input units, camera calibration, and output units, the team incorporated vision and ultrasonic sensors that work together to collect data from the surrounding environment in real time. Specifically, the ultrasonic unit detects obstacles and measures the distance to them. When the car encounters an obstacle, the system sounds a buzzer or horn and flashes a warning on the LED display that reads “Obstacle Detected”.

As part of the output unit, the LED display can also show important information, including the Raspberry Pi’s IP address and the car’s current action, such as “left turn”, “right turn”, “go straight”, or “stop”. Figure 1 shows the assembly of all the hardware sensors in detail.

Figure 1: Hardware assembly of the self-driving car
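The article does not name the ultrasonic sensor model or the distance at which the warning fires. As a minimal sketch, assuming a common time-of-flight ultrasonic sensor that reports the echo's round-trip time in seconds and a hypothetical 20 cm warning threshold, the distance calculation and the resulting buzzer/display output could look like this. The function names and constants are illustrative assumptions, not the team’s code.

```python
from typing import Tuple

# Speed of sound in dry air at roughly 20 °C, in centimetres per second.
SPEED_OF_SOUND_CM_S = 34300.0

# Hypothetical distance (cm) below which an obstacle triggers the warning.
OBSTACLE_THRESHOLD_CM = 20.0


def echo_to_distance_cm(echo_duration_s: float) -> float:
    """Convert an ultrasonic echo round-trip time into a one-way distance.

    The pulse travels to the obstacle and back, so the total path is halved.
    """
    if echo_duration_s < 0:
        raise ValueError("echo duration must be non-negative")
    return echo_duration_s * SPEED_OF_SOUND_CM_S / 2.0


def obstacle_status(
    distance_cm: float, threshold_cm: float = OBSTACLE_THRESHOLD_CM
) -> Tuple[bool, str]:
    """Return (buzzer_on, display_text) for a measured distance."""
    if distance_cm < threshold_cm:
        # Close obstacle: sound the buzzer and show the warning message.
        return True, "Obstacle Detected"
    # Path is clear: keep the buzzer off and show the measured distance.
    return False, f"Clear: {distance_cm:.1f} cm"
```

For example, a 1 ms echo corresponds to about 17 cm, which falls under the assumed threshold, so the buzzer would sound and the display would read “Obstacle Detected”.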

To test the effectiveness of their solution, the team built a track covering multiple driving scenarios. As shown in Figure 2, the model car traveled along the track using this system design, relying on the ultrasonic sensor to detect upcoming obstacles. The sensor measured the distance between the vehicle and each obstacle to determine whether the voltage supply to the wheels needed to be cut off to stop the car. The team also used vision sensors with four Haar cascade classifiers to read traffic signs. Using this data, each wheel on the car could be controlled to carry out the next maneuver.

Figure 2: The self-driving car on the team’s pre-designed track with traffic signs
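The control step described above combines two inputs: the obstacle distance, which decides whether wheel power is cut, and the traffic signs read by the classifiers, which decide how each wheel is driven. The following is a minimal sketch of that logic, assuming a car with one duty-cycle value per side, hypothetical sign labels (`"stop"`, `"left"`, `"right"`), a hypothetical 20 cm stopping distance, and an assumed priority order; the team’s actual classifier outputs and motor parameters are not described in the article.

```python
from dataclasses import dataclass
from typing import Iterable

# Hypothetical stopping distance in centimetres; the real value is not published.
STOP_DISTANCE_CM = 20.0


@dataclass(frozen=True)
class WheelCommand:
    """Duty cycle (0.0 = no power, 1.0 = full power) for each side of the car."""
    left: float
    right: float
    action: str


def decide(distance_cm: float, detected_signs: Iterable[str]) -> WheelCommand:
    """Pick a wheel command from the obstacle distance and detected sign labels.

    Priority order (an assumption for this sketch): a close obstacle or a
    stop sign first, then turn signs, otherwise drive straight.
    """
    signs = set(detected_signs)
    if distance_cm < STOP_DISTANCE_CM or "stop" in signs:
        # Cut power to both wheels to stop the car.
        return WheelCommand(0.0, 0.0, "stop")
    if "left" in signs:
        # Slow the left side relative to the right so the car turns left.
        return WheelCommand(0.3, 0.8, "left turn")
    if "right" in signs:
        # Slow the right side relative to the left so the car turns right.
        return WheelCommand(0.8, 0.3, "right turn")
    return WheelCommand(0.8, 0.8, "go straight")
```

In a real setup, the sign labels would come from running each of the four trained cascade files through something like OpenCV’s `cv2.CascadeClassifier(...).detectMultiScale(...)`, and the returned duty cycles would be sent to the motor driver.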

So how does this all come together?

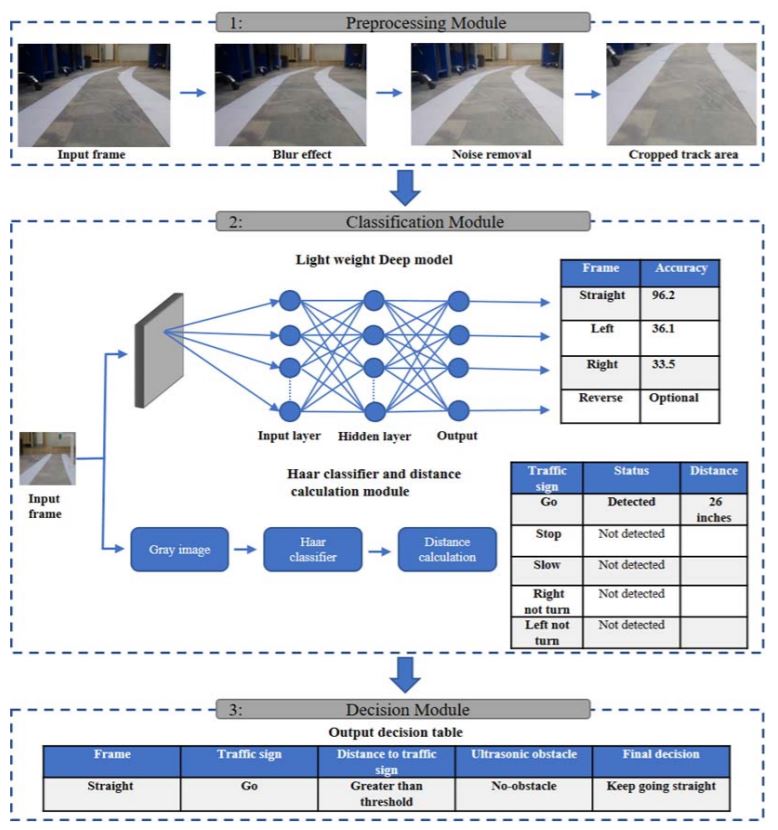

Each unit within the proposed system is linked to a module and has a specific role, as outlined in Figure 3.

In the pre-processing module, images captured by the vision sensors are processed to enhance the frame and remove noise caused by lighting conditions or the car’s movement. The result is a cropped frame of the track region, which is passed to the classification module for further evaluation. Using a neural network and traffic sign detection, the classification module determines which action the car should take (go, stop, or slow down) based on the frame from the previous module. Once the possible actions have been scored, the final module makes the decision. At this point, the system’s output unit takes over, controlling the car’s motors and setting its direction. This completes the loop for autonomous driving.

Figure 3: Detailed overview of the proposed framework.
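The three-module flow can be illustrated end to end with a small NumPy sketch. It is a simplified illustration under several assumptions: the input is an RGB frame, the track occupies the lower half of the image, noise removal is a 3×3 mean filter, and the classifier is a runnable stand-in rather than a trained neural network. The team’s actual pre-processing operations and network architecture are not detailed in the article, and all function names here are hypothetical.

```python
import numpy as np

ACTIONS = ("go", "stop", "slow down")


def preprocess(frame_rgb: np.ndarray) -> np.ndarray:
    """Pre-processing module: grayscale, denoise, and crop the track region."""
    # Luminance-weighted grayscale conversion (ITU-R BT.601 weights).
    weights = np.array([0.299, 0.587, 0.114], dtype=np.float32)
    gray = frame_rgb.astype(np.float32) @ weights
    # 3x3 mean filter to suppress noise; borders are padded by edge replication.
    padded = np.pad(gray, 1, mode="edge")
    h, w = gray.shape
    smoothed = sum(
        padded[dy:dy + h, dx:dx + w] for dy in range(3) for dx in range(3)
    ) / 9.0
    # Assumption: the track region is the lower half of the frame.
    cropped = smoothed[h // 2:, :]
    # Scale pixel values to [0, 1] for the classifier.
    return cropped / 255.0


def classify(track: np.ndarray) -> np.ndarray:
    """Classification module stand-in: return one score per action in ACTIONS.

    A real system would run a trained neural network here. This placeholder
    favors 'go' in bright frames and 'slow down' in dark ones.
    """
    brightness = float(track.mean())
    return np.array([brightness, 0.0, 1.0 - brightness], dtype=np.float32)


def decision_module(scores: np.ndarray, stop_sign_seen: bool) -> str:
    """Decision module: a detected stop sign overrides the classifier scores."""
    if stop_sign_seen:
        return "stop"
    return ACTIONS[int(np.argmax(scores))]
```

Keeping the three stages as separate functions mirrors the modular structure in Figure 3: the stand-in classifier can be swapped for a trained model without touching the pre-processing or decision logic.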

“Our goal was to design an economical autonomous solution that helps a current intelligent transport and driver assistant system,” said Muhammad. “Our system can be used as part of semi-autonomous systems to take control of the car to avoid obstacles when a driver is unable to pay attention when driving.”

With more than 1.25 million people dying in road traffic accidents worldwide each year, the push to reduce fatalities and create safer driving environments continues to intensify. As a next step, the team is focusing on designing efficient reinforcement learning algorithms that use a virtual environment to improve the autonomous car’s performance.

For more information on autonomous vehicles or intelligent transportation, visit the IEEE Xplore Digital Library.