As industries face increased pressure to meet ever-changing market demands – such as mass customization, greater product availability, and faster production cycles – companies are looking for new ways to increase collaboration between robots and their human operators. A transformation is underway, and a team at Rice University in Houston, Texas, believes augmented reality (AR) could be part of the solution.

Robots are already becoming essential to the manufacturing industry, where they support human operators in completing high-risk or monotonous tasks, create efficiencies along the supply chain, and increase productivity, all with the goal of building a more autonomous and competitive system. To reach the next level of collaboration, the Rice University team developed a solution that increases a robot’s autonomy using AR to request, preview, and approve or modify robot actions.

Using an AR interface, the approach leverages a robot’s decision-making capabilities – through its motion planner – and focuses on controlling the desired outcomes of a robot’s actions rather than each of the steps needed to complete tasks. To do this, users program a sequence of high-level goals, or “High-level Augmented Reality Specifications” (HARS), then allow the robot to decide what motions are required to achieve those goals.

Common practices found in current industrial settings typically involve low-level specifications or coding by a user. This solution deprioritizes low-level requests, such as how a robot navigates towards an object, picks it up and places it in a given location, and instead focuses on what the user wants to accomplish, giving the robot the ability to consider and evaluate a wider range of scenarios to complete the goal.

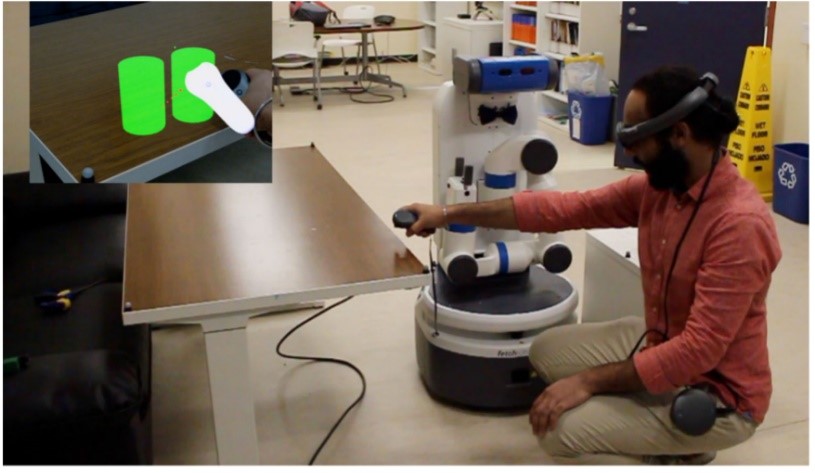

So how is this done? As Figure 1 illustrates, a human operator uses the AR interface to manipulate virtual objects and specify what they want from the robot. The virtual objects represent their real-life counterparts, so the robot can understand its task and the desired outcome, then use its decision-making capabilities to take the actions needed to complete it. For instance, placing two virtual cans on a table instructs the robot to execute the same action in the real world.

Figure 1: A user places two virtual cans in a desired location so the robot can record, plan and execute it in the real world

Once the robot receives the initial HARS and generates a course of action, a virtual preview of that plan is displayed to the user. The user can then approve the plan, reprogram the robot to find an alternative path, or abort the plan entirely. This feature lets users apply different levels of supervision to the robot’s assignment.
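The preview step can be pictured as a small review loop. The Python below is a minimal sketch, not the Rice team's implementation: `Goal`, `Decision`, `review_plan`, and the `planner` and `reviewer` callables are hypothetical names introduced only for illustration, and a plan is reduced to a plain list of step labels.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Callable, List, Optional, Tuple


class Decision(Enum):
    """The three responses a user can give to a previewed plan."""
    APPROVE = "approve"
    REPLAN = "replan"
    ABORT = "abort"


@dataclass(frozen=True)
class Goal:
    """Hypothetical stand-in for one high-level goal: an object and where
    the user placed its virtual copy."""
    object_name: str
    target: Tuple[float, float, float]


Plan = List[str]  # assumption: a plan is just an ordered list of step labels


def review_plan(
    goal: Goal,
    planner: Callable[[Goal, int], Plan],
    reviewer: Callable[[Plan], Decision],
    max_attempts: int = 3,
) -> Optional[Plan]:
    """Return the first plan the reviewer approves.

    Returns None if the reviewer aborts or every attempt is rejected.
    """
    for attempt in range(max_attempts):
        plan = planner(goal, attempt)   # the robot proposes a plan
        decision = reviewer(plan)       # the user inspects the preview
        if decision is Decision.APPROVE:
            return plan
        if decision is Decision.ABORT:
            return None
        # Decision.REPLAN: loop again and ask for an alternative
    return None
```

In this sketch a reviewer that rejects the first preview and accepts the second would receive the planner's second proposal, which mirrors the "find an alternative path" option described above.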

“Our work introduces a new approach that marks a shift in human-robot collaboration,” said Juan David Hernández, a postdoctoral fellow at the Computer Science Department at Rice University, who will be joining Cardiff University in December 2020. “Humans can collaborate with a robotic partner in a way that is not only more efficient, but also more natural and effortless.” According to Juan, this approach also allows robots to take charge of specific sub-tasks without requiring continuous human intervention.

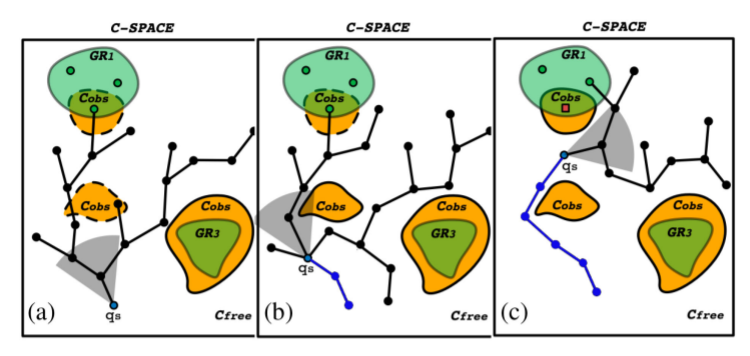

For more complex tasks, where information about the physical world is missing because the environment has changed or obstacles are in the way, the team built in fast re-planning, allowing the robot to continuously update its course until it reaches its goal. Figure 2 outlines this capability.

Figure 2: Online re-planning in a partially known environment. (a) The possible paths a robot can take to its goal (green), while factoring in identified (orange) obstacles to avoid. (b) Onboard sensors can detect unidentified (dashed line) obstacles and remove them from the robot’s original plan. (c) As the robot approaches the goal, it continuously re-plans as more obstacles are put into its path. The traveled path is shown in blue.
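To make the idea of online re-planning concrete, here is a toy Python sketch on a square grid. It is an assumption-laden illustration, not the team's planner: it swaps in a plain breadth-first search for the motion planner, and `bfs_path`, `navigate`, and `sensor_range` are hypothetical names. The robot starts with a set of known obstacles, discovers hidden ones only when they come within sensor range, and re-plans whenever a newly detected obstacle blocks its current path.

```python
from collections import deque


def bfs_path(start, goal, blocked, size):
    """Shortest 4-connected path on a size x size grid, or None if unreachable."""
    queue = deque([start])
    parent = {start: None}
    while queue:
        cell = queue.popleft()
        if cell == goal:
            path = []
            while cell is not None:      # walk back to the start
                path.append(cell)
                cell = parent[cell]
            return path[::-1]
        x, y = cell
        for nxt in ((x + 1, y), (x - 1, y), (x, y + 1), (x, y - 1)):
            inside = 0 <= nxt[0] < size and 0 <= nxt[1] < size
            if inside and nxt not in blocked and nxt not in parent:
                parent[nxt] = cell
                queue.append(nxt)
    return None


def navigate(start, goal, known, hidden, size, sensor_range=1):
    """Drive toward goal, re-planning when sensing reveals a blocking obstacle.

    Returns (traveled_path or None, number_of_replans).
    """
    known = set(known)
    position, traveled, replans = start, [start], 0
    path = bfs_path(position, goal, known, size)
    while path is not None and position != goal:
        # Sense: hidden obstacles within range (Chebyshev distance) become known.
        seen = {o for o in hidden
                if max(abs(o[0] - position[0]), abs(o[1] - position[1])) <= sensor_range}
        if not seen <= known:
            known |= seen
            if any(cell in known for cell in path):
                path = bfs_path(position, goal, known, size)
                replans += 1
                continue
        position = path[1]               # take one step along the current plan
        path = path[1:]
        traveled.append(position)
    return (traveled if position == goal else None), replans
```

Calling `navigate((0, 0), (4, 0), set(), {(2, 0)}, size=5)` has the robot head straight for the goal, detect the hidden obstacle one cell ahead, and detour around it after a single re-plan. A production planner would work in continuous space and re-plan far more efficiently; the grid only shows the control flow.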

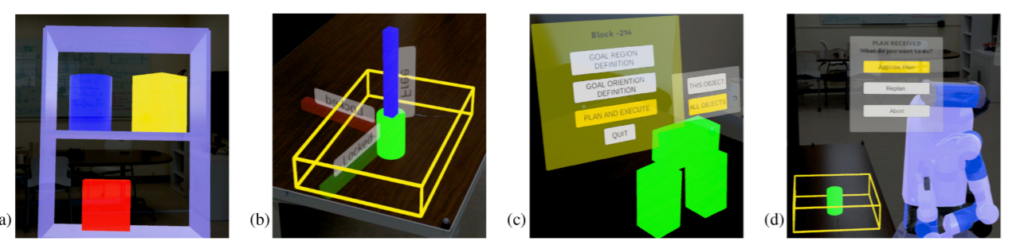

Figure 3 demonstrates the team’s entire solution. This includes identifying the virtual object through the AR interface, manipulating it to define the desired task, and previewing the robot’s plan before execution. The solution can be implemented not only for single-object scenarios but also for multi-object requests that include stacking or re-arranging multiple objects. To achieve the latter, a sequence of HARS can be defined so the robot can keep track of the order in which the user manipulated each virtual object.

Figure 3: The AR interface. (a) Inventory of objects. (b) The user’s command process in the virtual world. (c) The sequence of HARS for multiple objects. (d) The virtualized motions of the robot

Notably, this approach can be used in various workplace scenarios, and it can also support workers with different types of mobility impairments. A robot can be programmed to handle different objects or deliver them to a person who might need them because of such limitations.

“We consider this work to be the first step towards a better collaboration between humans and robots,” said Lydia Kavraki, a professor and director at Rice University. This approach also lays the foundation for developing new solutions that integrate AR technologies and planning for human-robot collaboration applications, she said.

Juan noted the team is already considering new applications for this technology. Going beyond efficiency improvements in collaborative tasks, the team is hoping to assess the combination of their proposed approach with other interaction modalities, such as speech and gestures.

For more information on augmented reality and autonomous robots, visit the IEEE Xplore digital library.