For the last decade, the autonomous vehicle revolution has hovered just beyond the horizon. In 2012, Google co-founder Sergey Brin promised that the company’s self-driving cars would be available to everyone within five years. Five years past that deadline, the dream of fully automated travel still eludes the general public.

The primary reason for this is safety. The artificial intelligence behind vehicles' visual sensing and perception systems continues to improve with new advances in deep learning, but these systems remain inferior to their biological counterpart: the human eye. Indeed, even the eyes of small insects like bees outperform the most advanced artificial vision systems at typical functions such as real-time sensing and processing, and low-latency motion control.

A new paper from IEEE researchers outlines a technology that aims to bridge this gap by bringing bio-inspired elements into sensing technology. Still largely unexplored by the automotive industry, event-based neuromorphic vision sensors operate on an entirely different set of principles from traditional frame-based sensors such as CMOS cameras. By mimicking the biological retina at both the system and element levels, this technology, coupled with radar, lidar, and ultrasound systems, could be the key to reaching the safety threshold needed for the widespread adoption of autonomous transportation.

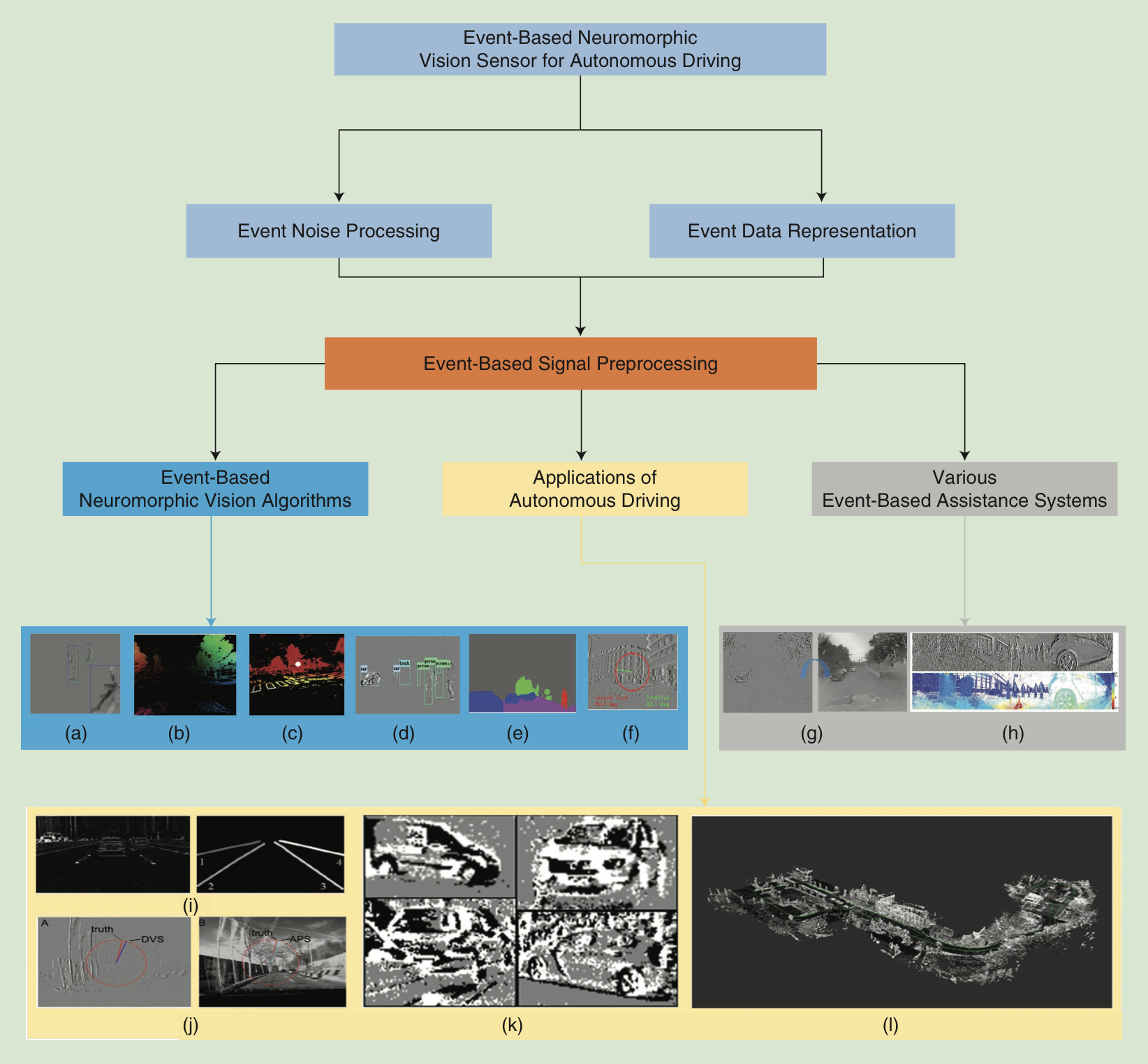

An overview of event-based neuromorphic vision sensors for autonomous driving, with representative examples for emerging systems and applications: (a) tracking (adapted from [22]), (b) optical flow (adapted from [29]), (c) depth estimation (adapted from [29]), (d) object detection (adapted from [52]), (e) semantic segmentation (adapted from [35]), (f) steering prediction (adapted from [3]), (g) image reconstruction (IR) (adapted from [45]), (h) panoramic stereo vision (adapted from [47]), (i) DET data set (adapted from [16]), (j) DDD17 data set [18] (adapted from [3]), (k) N-Cars data set (adapted from [17]), and (l) MVSEC data set (adapted from [4]).

“Neuromorphic vision sensors hold the potential for unparalleled energy efficiency, small bandwidth output, and lower-cost production,” said Alois Knoll, senior IEEE member and professor in the department of informatics at the Technical University of Munich. “With neuromorphic vision sensors, we are getting closer than ever to approaching retina-like vision and human-like driving for autonomous vehicles.”

Event-based neuromorphic vision sensors offer lower power consumption and latency than traditional cameras because each pixel works independently, eliminating the need for a global exposure of the frame. Sensors such as the dynamic vision sensor (DVS) can provide twice the dynamic range of frame-based cameras, adapting to very dark and very bright stimuli in scenarios such as entering and exiting a tunnel. Further, analog circuitry allows brightness changes to be captured very quickly; with a 1 MHz clock, events can be detected and timestamped with microsecond resolution. Finally, event-based neuromorphic vision sensors capture dynamic motion precisely, without the motion blur that commonly affects traditional sensors.
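The per-pixel behavior described above can be sketched with the idealized event-generation model commonly used in the event-camera literature, which the paragraph itself does not spell out: each pixel stores a reference log intensity and emits an ON (+1) or OFF (-1) event whenever its log intensity moves by a contrast threshold away from that reference. This is a minimal sketch under stated assumptions, not the paper's circuit: the names `Event` and `pixel_events` and the threshold of 0.2 are hypothetical, and the model samples a signal at discrete microsecond timestamps instead of simulating the analog pixel.

```python
import math
from dataclasses import dataclass


@dataclass
class Event:
    t_us: int      # timestamp in microseconds
    x: int         # pixel column
    y: int         # pixel row
    polarity: int  # +1 = ON (brighter), -1 = OFF (darker)


def pixel_events(samples, x=0, y=0, threshold=0.2):
    """Idealized single-pixel DVS model (hypothetical helper, an assumption).

    samples: list of (t_us, intensity) pairs with intensity > 0.
    The pixel works independently of all others: it fires only when its own
    log intensity changes by at least `threshold` from the stored reference.
    """
    events = []
    _, first_intensity = samples[0]
    ref = math.log(first_intensity)  # reference level set at the last event
    for t_us, intensity in samples[1:]:
        level = math.log(intensity)
        # A large brightness change can cross several thresholds at once,
        # producing several events stamped with the same sample time.
        while level - ref >= threshold:
            ref += threshold
            events.append(Event(t_us, x, y, +1))
        while ref - level >= threshold:
            ref -= threshold
            events.append(Event(t_us, x, y, -1))
    return events


# Usage: brighten the pixel, darken it, then hold it constant.
samples = [(0, 1.0), (10, math.exp(0.5)), (20, 0.5), (30, 0.5)]
for event in pixel_events(samples):
    print(event)
```

Because output is produced only when brightness changes, a static pixel emits nothing (the final constant sample above adds no events), which is one way to see why such sensors can have a small bandwidth output.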

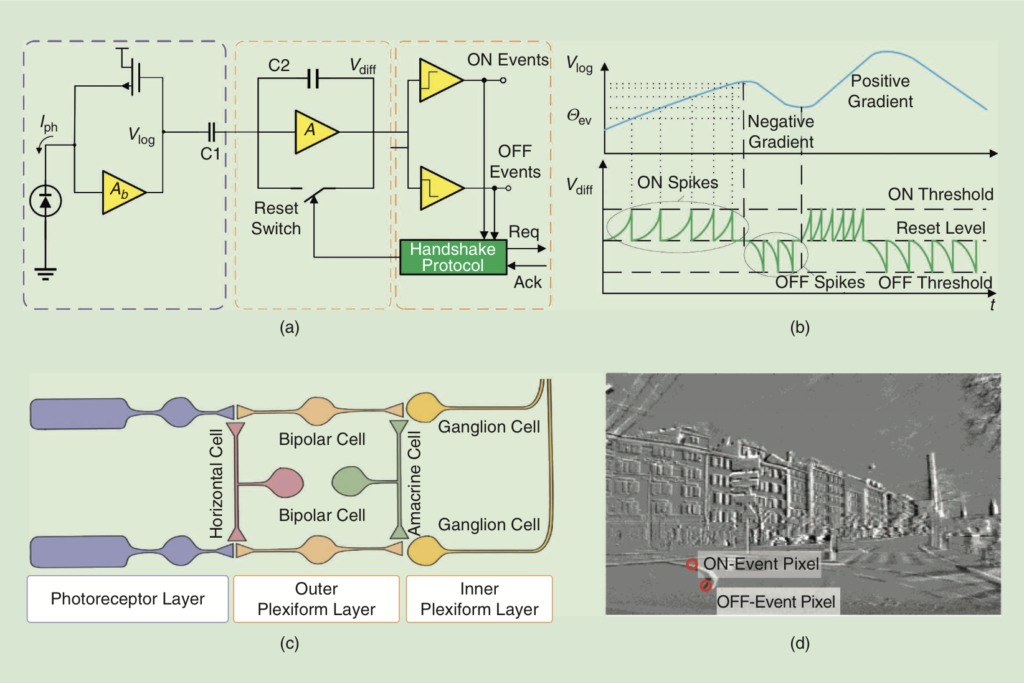

A practicable silicon retina DVS based on biological principles (adapted from [53]): (a) DVS pixel circuitry, (b) typical signals of the pixel circuits, (c) a three-layer model of a human retina, and (d) the accumulated events from a DVS. In the accumulated event map, ON events (illumination increased) and OFF events (illumination decreased) are drawn as white and black dots, respectively.
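An accumulated event map like the one in panel (d) can be produced by collecting the events from a time window into a gray image, painting ON events white and OFF events black. The sketch below is a hypothetical visualization helper, not the method from [53]: the `(t_us, x, y, polarity)` tuple layout, the gray levels 128/255/0, and the rule that a pixel's latest event in the window wins are all assumptions.

```python
def accumulate_events(events, width, height, t_start, t_end):
    """Render events with t_start <= t_us < t_end as a grayscale event map.

    Hypothetical helper (an assumption, not from the paper).
    events: iterable of (t_us, x, y, polarity), polarity +1 (ON) or -1 (OFF).
    Returns a height x width list of lists:
    128 = no event, 255 = ON (white dot), 0 = OFF (black dot).
    """
    BACKGROUND, ON_VALUE, OFF_VALUE = 128, 255, 0
    frame = [[BACKGROUND] * width for _ in range(height)]
    # Sorting the tuples orders events by timestamp first, so when a pixel
    # fires several times in the window, its most recent event is kept.
    for t_us, x, y, polarity in sorted(events):
        in_window = t_start <= t_us < t_end
        in_bounds = 0 <= x < width and 0 <= y < height
        if in_window and in_bounds:
            frame[y][x] = ON_VALUE if polarity > 0 else OFF_VALUE
    return frame


# Usage: a 2 x 2 sensor, keeping only events from the first 10 microseconds.
events = [(5, 0, 0, 1), (7, 1, 0, -1), (8, 1, 0, 1), (9, 0, 0, -1), (50, 1, 1, 1)]
for row in accumulate_events(events, width=2, height=2, t_start=0, t_end=10):
    print(row)
```

A fixed time window is only one design choice; accumulating a fixed number of events, or fading older events, are common alternatives when the scene moves at varying speeds.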

The paper, which to date has been cited 54 times, aims to provide a foundation for other researchers to build upon in further developing this groundbreaking technology. The authors begin by tracing how neuromorphic vision sensors derive from their biological counterpart, the retina, and then discuss in depth the physics and signal processing principles behind the technology. From there, the paper reviews signal processing algorithms and applications of event-based neuromorphic vision in autonomous vehicles and driver assistance systems. The work concludes with an outline of future research directions for the technology.

“Neuromorphic vision sensors pose a paradigm shift to sense and perceive the world for autonomous vehicles, which is however almost undiscovered by the automotive industry,” Knoll said. “In this tutorial-like paper, we aim to build a bridge between the neuroscience and autonomous driving research communities.”

View the full-text article on IEEE Xplore.